What is the Demand for Integrating Qualitative Research into AI Systems for Defence Applications?

- Dr Stephen Anning

- Oct 20, 2025

Introduction

A series of Ministry of Defence (MOD) policy documents reflects a growing recognition that truly human-centric Artificial Intelligence (AI) requires far more than well-curated quantitative data and powerful machine-learning algorithms. Human-centred AI for intelligence analysis demands a deeper, more qualitative understanding of how humans think, decide, and work.

Human-centred ways of working with AI in intelligence analysis is the source document upon which these policies are based. It explains the value of qualitative research in capturing the nuances of human knowledge, cognition, and situational complexity in intelligence work. The same theme features in other Defence publications, such as Ambitious, Safe and Responsible AI, the Defence Artificial Intelligence Strategy, and JSP 936, which together signal both a theoretical need and a genuine demand for integrating qualitative research approaches into AI systems for Defence applications.

This document explains:

The underlying premise that a human-centric application for intelligence analysis can only be ‘human-centric’ if the system incorporates qualitative research approaches.

The key distinctions between qualitative approaches to assessing AI applications and qualitative approaches to intelligence analysis.

How the core themes in Human-centred ways of working with AI in intelligence analysis manifest in other Defence documents, illustrating the breadth and depth of this demand.

By weaving these threads together, we see an evolving Defence ecosystem that seeks to ensure that AI does not operate in a purely mechanistic or reductionist manner, but is enhanced by a more holistic understanding of human contexts.

Context: The Rising Demand for Qualitative Research in Defence Applications

The Limitations of Quantitative-Only AI

Much of today’s AI in the Defence context relies on collecting and processing large datasets. These datasets help uncover patterns, generate forecasts, or automate tasks that once required extensive human labour. However, “Human-centred ways of working with AI in intelligence analysis” emphasises that mining large, seemingly rich datasets is not always sufficient to produce meaningful or ethically valid insights. In intelligence work, contextual factors such as an analyst’s intuitive sense, domain knowledge, and experience of previous cases matter greatly. AI that relies purely on quantitative data risks overlooking the nuances of human experience, and those nuances must be understood when AI-enabled analysis informs highly consequential decisions. Qualitative research approaches, by contrast, enable analysts to incorporate cultural, psychological, or narrative factors into intelligence analysis.

Defence Imperatives for Human-Centric Systems

There is more at stake in the Defence domain than simple business efficiency. As Ambitious, Safe and Responsible AI notes, AI “has enormous potential to enhance capability, but it is all too often spoken about as a potential threat.” This tension between opportunity and concern stems from the high stakes inherent in Defence: poorly contextualised AI-enabled decisions can produce catastrophic real-world consequences. As a result, the MOD maintains that adopting AI responsibly must include robust evaluation methodologies that look beyond raw metrics such as precision and recall. Integrating qualitative research aligns with the ethical and safety policies set out in that paper, helping to ensure that human operators can confidently understand, shape, and interpret AI applications.

The Roots of Demand: Intelligence Analysis and Complexity

Documents like JSP 936, which sets requirements for dependable AI, note that new technologies must be “human-centric,” especially where the environment is too complex or fast-changing for purely rules-based logic. Intelligence analysts, who typically deal with dynamic scenarios, emphasise the importance of situational awareness and interpretive frameworks, both of which benefit from the nuance of qualitative data. When an intelligence problem is less a puzzle to be solved with data and more a “mystery” (as Human-centred ways of working with AI in intelligence analysis describes it), AI systems must pick up subtle signals, weigh contextual factors, and adapt to uncertain or incomplete information. Such tasks are almost impossible to accomplish solely through numeric metrics or computational pattern recognition; they demand qualitative insights derived from methods such as interviews, scenario-based reasoning, or ethnographic-style observation.

Differentiating Between Qualitative Approaches for AI Assessment and Qualitative Approaches for Intelligence Analysis

Defence AI generates two closely related but distinct types of demand for qualitative approaches. Qualitative approaches to assessing AI examine how well the system supports user tasks, whereas qualitative approaches to intelligence analysis focus on understanding the external world of events, actors, and narratives.

Qualitative Approaches to Assess AI Applications

Purpose: Evaluate or improve the design, transparency, and deployment of AI systems themselves, focusing on aspects like user acceptance, interpretability, trustworthiness, and alignment with ethical guidelines.

Methods:

User-Centred Design interviews, think-aloud sessions, or observational studies on how analysts interact with an AI interface.

Focus groups with cross-functional teams (analysts, software engineers, operators, policy experts) to discuss experiences or highlight failure points in the user experience.

Case Studies capturing lessons learned and best practices from pilot deployments of AI tools.

Outcome: A refined AI solution that more adequately fits the user’s cognitive and contextual needs, with clear guidelines on usage, training requirements, and risk mitigation measures.

Qualitative Approaches to Intelligence Analysis

Purpose: Enrich the intelligence process by incorporating non-quantifiable elements such as cultural context, psychological or behavioural dimensions of actors, narrative arcs, or socio-political undercurrents.

Methods:

Human Intelligence (HUMINT) interviews and debriefings to form or validate hypotheses about an adversary’s motivations.

Ethnographic or anthropological fieldwork investigating local communities and networks in a theatre of operations.

Scenario Analysis sessions with intelligence professionals to foresee plausible developments in ambiguous or rapidly evolving situations.

Outcome: More holistic, context-informed intelligence judgments that fuse ‘hard data’ from AI-driven or quantitatively focused tools with ‘soft signals’ gleaned from face-to-face interactions, situational knowledge, and interpretive frameworks.

By articulating this distinction, Defence planners can ensure that each domain receives the appropriate skill sets, methods, and oversight structures.

Core Themes in “Human-centred ways of working with AI in intelligence analysis” and Their Reflection in Other Documents

Balancing Knowledge, Psychology, and Systems

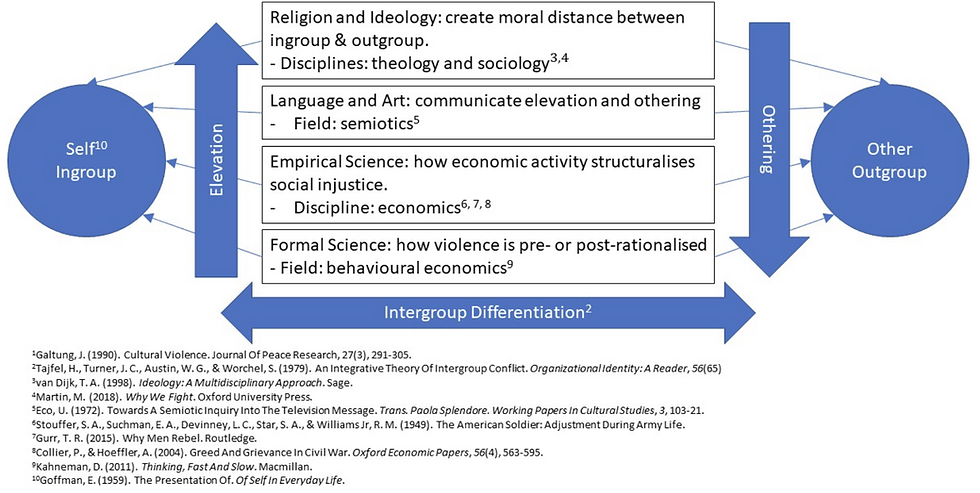

A significant insight from Human-centred ways of working with AI in intelligence analysis is that intelligence activities can be studied through three overlapping approaches: knowledge-based, psychological, and systems-based. The idea of “human-machine teaming” captures the interplay between these approaches.

Knowledge-based: Recognises the importance of understanding how data is transformed into information and, ultimately, into genuine insight for decision-making.

Psychological: Examines analyst biases, intuition, skill levels, and domain expertise, highlighting that human cognition cannot be reduced to purely quantitative measures.

Systems-based: Examines the broader environment (procedures, organisational structures, resource constraints) that shapes how analysis and decisions unfold in real time.

The Defence Artificial Intelligence Strategy echoes these themes, stating that the MOD must transform into an “AI-ready” organisation by aligning workforce culture, data governance, and technology enablers. The strategy also calls for training and upskilling staff in both technical data-science techniques and “contextual thinking,” which draws heavily on qualitative approaches.

Unpredictability and Trust in AI

A recurring concern in both Ambitious, Safe and Responsible and JSP 936 is the unpredictability of certain AI algorithms in new or fluid operational contexts. Machine learning models, particularly deep-learning models, can exhibit “black box” behaviour that analysts struggle to interpret. Integrating qualitative approaches has the potential to foster greater trust and interpretability by:

Using direct user feedback to shape the development and deployment phases. Interviews, observational studies, or focus groups can yield narratives about how and why specific AI outputs appear consistent or inconsistent with established domain knowledge.

Providing structured human-in-the-loop frameworks, in which experienced analysts critically evaluate AI-generated outputs so that decision-making remains rigorous.

The trust dimension is not optional: Ambitious, Safe and Responsible stresses that public and stakeholder confidence is critical to the use of AI in Defence. By incorporating robust qualitative assessments of how analysts adopt or reject AI-driven suggestions, programmes can strengthen accountability, limit bias, and meet their moral and ethical obligations.

Qualitative Methods in Action: Needles, Haystacks, and Mysteries

Human-centred ways of working with AI in intelligence analysis uses the metaphors of needles, haystacks, puzzles, and mysteries to convey the complexity inherent in intelligence work. AI can certainly help in the “needle in a haystack” scenario by scanning large volumes of data. But in the “mystery” scenario, where incomplete, shifting, and ambiguous signals must be pieced together, quantitative approaches alone will not suffice. Perfect data never exists, and its absence limits the rigour of AI-generated outputs. Human knowledge, judgment, and intuition fill the gaps that imperfect data leaves.

Ambitious, Safe and Responsible AI also tackles these complexities through a ‘systems-of-systems’ perspective, clarifying that legal, safety, and regulatory regimes must incorporate “robust, context-specific understanding,” not just top-level checklists. This context specificity can only be achieved effectively through qualitative methods, which allow each intelligence cell or command unit to adapt AI solutions to local conditions, perceived threats, and mission objectives.

Evidence of Broad Demand Across Defence

Policy Mandates and Capability Development

The Defence Artificial Intelligence Strategy makes a consistent call to integrate more robust, context-aware methods into AI acquisition and capability development cycles. The strategy insists that “ambitious” does not mean ignoring risk or context but operating “in a safe and responsible manner.” One cannot responsibly roll out AI for high-stakes decision-making or intelligence tasks without ensuring it meets rigorous requirements for transparency, reliability, and ethical scrutiny, dimensions where qualitative research is particularly essential.

Operational Testing and Interoperability

JSP 936 notes that “AI is abstract and will exist within some larger system to achieve effect,” urging staff to consider how systems are tested and evaluated beyond standard performance metrics. Knowledge gleaned from qualitative interviews or focus groups helps clarify how well AI tools mesh with existing workflows, user capabilities, and operational constraints. As NATO partners adopt parallel AI capabilities, aligning around these richer forms of evaluation will also promote interoperability by establishing consistent ways of verifying trust, accountability, and data governance.

Shift Toward Human-Machine Teaming

Each document repeatedly emphasises the model of human-machine teaming. Humans excel at context, creativity, and interpretation, while machines excel at repetitive, large-scale data processing. Integrating qualitative approaches into AI systems supports effective collaboration between humans and machines, in contrast to the reductive black-box allure of purely quantitative data models. This emphasis on human-machine teaming strongly signals the demand to integrate qualitative approaches into intelligence analysis, because those approaches capture the interpretive, reflective side of intelligence rather than adopting a purely mechanistic viewpoint.

Implications and Moving Forward

Training and Skills

A robust pipeline of personnel skilled in qualitative research is necessary. Human-centred methods of working with AI in intelligence analysis include narrative interviews, diaries, and immersive observation. Defence must train AI specialists in these methods just as it trains them in data wrangling, coding, and statistics. This combined skill set ensures that technology design, deployment, and evaluation can pivot between numeric and narrative modes of thinking, fostering a workforce comfortable with multidisciplinary collaboration.

Organisational Culture

The documents discussed above consistently emphasise the need for cultural change that enables broader acceptance and integration of qualitative methods. Whether the aim is to empower users to question AI-driven recommendations or to establish that AI is not a final authority but a tool that can be challenged and refined, these changes require more than new workflows. They require a shift in mindset that values both quantitative and qualitative forms of evidence, embedding the principles of user feedback, continuous learning, and open collaboration.

Accountability and Ethics

Ethical guidelines in Ambitious, Safe and Responsible and JSP 936, which focus on context-appropriate human oversight, bias mitigation, reliability, and transparency, can be met more robustly when qualitative research examines how AI systems evolve in real-world environments. For instance, user narratives might reveal unforeseen biases, such as language difficulties or cultural assumptions embedded in datasets. Without targeted qualitative inquiry, such biases might remain hidden despite being operationally significant.

The Path to Ongoing Innovation

By coupling advanced quantitative AI models with deep qualitative insights, Defence organisations can foster an innovation culture that respects both the complexities of intelligence work and the moral demands of real-world deployment. As multiple documents affirm, the mission is not to curb technological progress but to shape it responsibly so that AI systems integrate fluidly with existing intelligence practices, align with overarching legal-ethical frameworks, and sustain the credibility of Defence within the broader public sphere.

Conclusion

The demand for integrating qualitative research approaches into AI systems for Defence applications is not a peripheral concern; it is fundamental to creating truly human-centric solutions. Each of the primary documents (Human-centred ways of working with AI in intelligence analysis, Ambitious, Safe and Responsible, the Defence Artificial Intelligence Strategy, and JSP 936) advances a similar conclusion: AI must be anchored in deeper, contextually aware, user-informed, and ethically robust approaches because quantitative approaches alone are insufficient in intelligence and Defence contexts.

An application that relies solely on quantitative methods cannot, by definition, be fully human-centric, because it strips away the crucial context, interpretive nuance, and ethical reflection that only qualitative inquiry can supply. Integrating the two domains (quantitative data science and qualitative interpretive research) can help analysts better meet the complexities of modern defence challenges. Such integration also helps ensure that the fundamental values of humanity, democratic accountability, and legal-ethical responsibility remain intact when advanced AI tools are employed to protect national security.

Granted, quantitative research strives for objectivity through standardised methods, statistical analysis, and measurable data. Yet complete objectivity is rarely achievable, because researchers inevitably make interpretive choices about what to measure, how to categorise variables, and how to frame results. Even numerical findings require qualitative interpretation to give them meaning within broader theoretical or social contexts.

Defence organisations will require new policies and more profound cultural shifts to integrate qualitative and quantitative approaches. They must develop the capacity to sustain participatory design processes, rely on rich user feedback loops, conduct nuanced scenario analyses, and remain vigilant to emergent ethical concerns. By doing so, they will elevate their AI systems' reliability and moral integrity, ensuring that, in the evolving landscape of intelligence analysis, technology and human insight stand side by side, each reinforcing and sharpening the other.

